Early Days of Computing

Lab: Evolution of Computing

Video Runtime: 26:14

Before there were programmable computers, humans were the only computers. Processes were broken down into sequential steps, which a pool of people worked on, hour after hour, day after day. This process was labor-intensive and prone to error. Mathematicians sought a more efficient means of doing the work of the human computer.

During this period, our world of computing progressed, as sequential tasks were finally captured in a form that a machine could process. This period brought the world relay logic, the precursor to modern-day computer circuits. People worked as programmers, converting sequential instructions into a form that machines and circuits could execute. The first commercial computer was delivered in 1951. This period was a major turning point in our computing history, one which shaped our field and roles.

Founder of Computer Science and Modern Computing – Alan Turing

This discussion begins with the founder of computer science and modern computing, Alan Turing. Every computer science and software engineering student learns about Turing, as modern computing began here. In 1936, Turing introduced the Turing machine. You can read about it in his paper, “On Computable Numbers, with an Application to the Entscheidungsproblem.” All modern-day computers are based upon the Turing machine. Therefore, we need to spend some time discussing it.

What is the Entscheidungsproblem?

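In 1928, German mathematician David Hilbert challenged mathematicians to find a general, mechanical procedure that could decide whether any given mathematical statement is provable. The aim was to turn the work of the human computer into provable, repeatable, reliable, and consistent mathematical expressions. He essentially wanted a method that always arrives at a true or false answer.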

Thought Experiment

A true statement equates to a numeric value of one. In electricity, a true value equates to a state of on. Conversely, a false value, which is a numeric value of zero, equates to an off state in electrical circuits.

Think about this challenge. How can you capture deductive reasoning as discernible proofs in math? Can every mathematical problem be solved in this manner? Could we capture the logical steps of problem-solving in the form of math?

He called this challenge the Entscheidungsproblem, which is German for the “decision problem.”

Turing Machine

In his paper, Turing set out to tackle computable numbers and the Entscheidungsproblem. He answered Hilbert’s challenge in the negative by showing that no general method can prove or disprove every problem in all cases. What changed our world was the device he introduced for his proof: the Turing machine.

What is the Turing Machine?

The Turing machine can solve any computing problem that can be translated into sequential, logical steps. Stop and think about the impact of this discovery. If you can describe how to solve a problem step by step, then his machine can solve it. The Turing machine converted the human computer into a mechanical machine.

How does it achieve this?

Think of his machine as a very simple robot that can move from side to side along a long paper tape. Now imagine this tape divided into small boxes in a row all the way down its length. Each box holds a single 1, a single 0, or nothing. That’s it. The robot reads the value in the box it is on, looks up the instruction for its current state and that value (the code), and then does what the instruction says. It can leave the value as is or change it (just like in memory). Then it moves to the position the instruction specifies and enters the next state. This process continues until it receives a halt command.
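The tape-and-robot description can be sketched in a few lines of Python. This is only an illustrative model, not Turing’s own notation: the function name `run_turing_machine`, the rule table `FLIP_BITS`, and the state names `start` and `halt` are all assumptions made for this example. Each rule maps (current state, value read) to (value to write, direction to move, next state).

```python
from collections import defaultdict

BLANK = " "  # an empty box on the tape


def run_turing_machine(rules, tape, state="start", head=0, max_steps=1000):
    """Follow the rule table until the machine enters the 'halt' state."""
    # The tape is a row of boxes; any box not yet visited is blank.
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells[head]                         # read the current box
        write, move, state = rules[(state, symbol)]  # fetch the instruction
        cells[head] = write                          # leave or change the value
        head += 1 if move == "R" else -1             # slide right or left
        steps += 1
    if not cells:
        return ""
    return "".join(cells[i] for i in range(min(cells), max(cells) + 1)).strip()


# Hypothetical rule table: flip every bit, then halt at the first blank box.
FLIP_BITS = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", BLANK): (BLANK, "R", "halt"),
}

print(run_turing_machine(FLIP_BITS, "1011"))  # prints 0100
```

Changing the rule table changes what the machine computes, while the loop that reads, writes, and moves stays the same.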

The Take-away

We will go into more depth about Turing’s machine and how it works in CS 0100 Big Picture of the Computer and the Web. For now, remember that Turing gave us a fundamental understanding that code and data can be represented the same way in a machine.

Meet John von Neumann

In 1945, John von Neumann took Turing’s ideas and developed an architecture for the machine. He designed a central core to fetch both the data and code out of memory, execute the code (perform the math), store the results, and then repeat the process until the halt command was given. This may not sound amazing now, but in its day, it was revolutionary.
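The fetch, execute, store, and repeat cycle can be sketched as a short loop. This is a minimal illustration under stated assumptions: the `ADD` and `HALT` instruction names, the tuple layout, and the function name `run_stored_program` are hypothetical and do not reflect von Neumann’s actual instruction set. The point it shows is that code and data sit side by side in the same memory.

```python
def run_stored_program(memory):
    """Fetch an instruction, execute it, and repeat until HALT."""
    pc = 0  # program counter: the address of the next instruction
    while True:
        op, *args = memory[pc]  # fetch the instruction from memory
        pc += 1
        if op == "HALT":
            return memory
        if op == "ADD":
            dest, left, right = args
            # execute the math and store the result back into memory
            memory[dest] = memory[left] + memory[right]
        else:
            raise ValueError(f"unknown instruction: {op}")


# Cells 0-1 hold code; cells 2-4 hold data. Both live in one memory.
memory = [("ADD", 4, 2, 3), ("HALT",), 20, 22, 0]
run_stored_program(memory)
print(memory[4])  # prints 42
```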

His architecture included what he called “conditional control transfer,” or subroutines in today’s terms. Think about Turing’s machine. Each time it came to a box, it fetched the instructions for that location, i.e. it went into another chunk of code or subroutine. Instead of a linear, sequential approach, where a machine can only do the steps in order, this design allowed for moving around or jumping to specific points in code. This concept led to branching and conditional statements, such as IF (instruction) THEN (instruction), as well as looping with a FOR command. This idea of “conditional control transfer” led to the concepts of “libraries” and “reuse,” both of which are cornerstones of software engineering principles and quality code.
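To make the idea of jumping to a specific point in code concrete, here is a small sketch in which a conditional jump creates a loop. The instruction names (`ADD`, `DEC`, `JUMP_IF_POSITIVE`, `HALT`), the register names, and the function `run_with_jumps` are assumptions invented for this example, not part of any historical machine.

```python
def run_with_jumps(program, registers):
    """Run instructions in order, except where a jump changes the order."""
    pc = 0  # program counter: index of the next instruction
    while program[pc][0] != "HALT":
        op, *args = program[pc]
        pc += 1  # by default, continue with the next instruction
        if op == "ADD":                   # ADD dest, src: dest = dest + src
            dest, src = args
            registers[dest] += registers[src]
        elif op == "DEC":                 # DEC reg: reg = reg - 1
            registers[args[0]] -= 1
        elif op == "JUMP_IF_POSITIVE":    # conditional control transfer
            reg, target = args
            if registers[reg] > 0:
                pc = target               # jump instead of moving on
        else:
            raise ValueError(f"unknown instruction: {op}")
    return registers


# Multiply x by count using repeated addition and a backward jump.
MULTIPLY = [
    ("ADD", "total", "x"),             # 0: total = total + x
    ("DEC", "count"),                  # 1: count = count - 1
    ("JUMP_IF_POSITIVE", "count", 0),  # 2: IF count > 0 THEN go to step 0
    ("HALT",),                         # 3: stop
]

result = run_with_jumps(MULTIPLY, {"total": 0, "x": 4, "count": 3})
print(result["total"])  # prints 12
```

Step 2 is the whole trick: without it the program runs once from top to bottom; with it the same three instructions are reused as many times as needed.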

Building off of Turing

In 1938, Konrad Zuse built the first mechanical binary programmable computer. Then, in 1943, Thomas Flowers built Colossus, which many consider to be the first programmable electronic digital computer. It was used to decipher encrypted messages between Adolf Hitler and his generals during World War II. Machines were not yet general purpose; rather, each performed a specific function for a specific purpose. The first commercial computer was delivered to the U.S. Bureau of the Census in 1951. It was called UNIVAC I, and it was the first computer used to predict the outcome of a presidential election.

Early Programming Languages

Early programs were written in machine language, where every instruction or task was written as a series of on and off states, which a machine can understand and execute. Recall that on is the same as a decimal value of 1, while off is a decimal value of 0. These simple on and off states are the essence of digital circuitry.

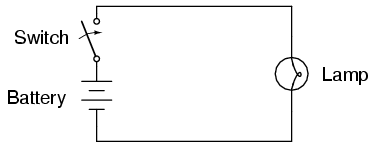

Think of a light switch on your wall. You flip the switch in one direction and the light comes on. Flip it the other direction and the light goes off. This switch’s circuit is similar to the simple electrical circuit diagram in Figure 1. The switch on your wall opens and closes the circuit. When the switch is closed, electricity flows freely from the power source (a battery in this case) through the switch on your wall and then to the lamp in your room, which turns the light on. When the switch is open (as it is shown in the diagram), the circuit is broken and no electricity flows, which turns the light off.

Now think of the on state as a value of 1. When the switch is closed (picture pushing the switch down until it touches wires on both sides), power flows, the light comes on, and the value is 1 or on. Invert that thought process. Open the switch. What happens? The switch is open, the circuit is broken, power stops flowing, the light goes off, and the value is 0 or off.

Within a machine, past and present, the flow of power through circuits is represented by 1s and 0s. Machine language uses these 1 and 0 values to turn states on and off within the machine. Combining these 1s and 0s allows the programmer to get the machine to do what is needed. We will cover machine logic and language later. The takeaway for now is:

- An “on” state is represented by a decimal value of 1.

- An “off” state is represented by a decimal value of 0.
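As a rough sketch of this takeaway, a row of switches can be read off as a pattern of 1s and 0s. The function names `switch_to_bit` and `switches_to_bits` are made up for this illustration.

```python
def switch_to_bit(closed: bool) -> int:
    """A closed switch lets power flow (1); an open switch does not (0)."""
    return 1 if closed else 0


def switches_to_bits(switches) -> str:
    """Read a row of switches from left to right as a string of 1s and 0s."""
    return "".join(str(switch_to_bit(closed)) for closed in switches)


# Four switches: open, open, closed, open
print(switches_to_bits([False, False, True, False]))  # prints 0010
```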

With the first programming languages, programmers manually converted each instruction into a binary representation, a combination of these 1s and 0s. This is machine code.

Imagine programming in just 1s and 0s. You would need to code each and every step to tell the machine to do this, then that, and so on. Let’s see what steps you would walk through to compute the following equation: A = B + C.

Steps to compute A = B + C in Machine Code

In machine code, the first part of the binary code represents the task to be done. It is a code in itself. Therefore, a load instruction may be 0010, an add instruction may be 1010, and a store may be 1100. Using these instructions, let’s break down the steps required to tell the computer how to solve the equation A = B + C.

Note: In this next section, don’t worry about understanding binary code, as we will explain the binary numbering system later in this course. The memory locations and instructions are arbitrary. If you want to see what a binary value converts to in decimal, you can use a binary-to-decimal converter.
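If you have Python available, its built-in `int` and `format` functions can stand in for a converter. The two wrapper names below are chosen just for this sketch.

```python
def binary_to_decimal(bits: str) -> int:
    """Interpret a string of 1s and 0s as a base-2 number."""
    return int(bits, 2)


def decimal_to_binary(value: int, width: int = 4) -> str:
    """Write a number in binary, padded with leading zeros to `width` digits."""
    return format(value, f"0{width}b")


print(binary_to_decimal("1011"))  # prints 11
print(decimal_to_binary(12))      # prints 1100
```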

Step 1: Load B

First, we need to load the data stored in B into a working memory location for us to manipulate and use. In machine code, this means we are doing a load instruction. We tell the computer to go and grab the value from the location where B is stored in memory (e.g. memory location 1) and then put it (load it) into the working memory (e.g. at location 11). In binary code, this task becomes:

0010 1011 0001

where:

- 0010 is the Load instruction

- 1011 (decimal value 11) is the working memory location of where to store B

- 0001 (decimal value 1) is the storage memory location of B, i.e. where it is stored.
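The way the three fields line up can be sketched as a small helper that assembles the load instruction from decimal locations. The name `encode_load` and the 4-bit field width are assumptions taken from this example’s arbitrary instruction format.

```python
LOAD = "0010"  # the arbitrary load opcode used in this walkthrough


def encode_load(working_location: int, storage_location: int) -> str:
    """Build a load instruction: opcode, working location, storage location."""
    for location in (working_location, storage_location):
        if not 0 <= location <= 15:
            raise ValueError("each location must fit in 4 bits (0 to 15)")
    fields = [LOAD, format(working_location, "04b"), format(storage_location, "04b")]
    return " ".join(fields)


print(encode_load(11, 1))  # prints 0010 1011 0001
```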

Let’s do the next step.

Step 2: Load C

Just like in Step 1, we need to load the data for C into memory in order to work on it. Therefore, it is a load instruction again, where C is in memory location 2 and we put it into the working memory location of 12.

0010 1100 0010

where:

- 0010 is the Load instruction

- 1100 (decimal value 12) is the working memory location of where to store C

- 0010 (decimal value 2) is the storage memory location of C, i.e. where it is stored.

At this point, the computer has both B and C loaded into the working memory. Now we can do the next step.

Step 3: Add the numbers in working memory

In this step we add the two numbers together. Therefore, we need to tell the computer to do an add instruction and store the result into a memory location (e.g. 13), using the working memory locations for B (which is 11 from above) and C (which is 12 from above).

1010 1101 1011 1100

where:

- 1010 is the add instruction

- 1101 (or decimal 13) is the memory location where to store the result.

- 1011 (or decimal 11) is the memory location where B is stored in working memory.

- 1100 (or decimal 12) is the memory location where C is stored in working memory.

Now we have a result for A. Next we need to store it.

Step 4: Store the result

It’s time to store the result into a storage memory location. We need to tell the computer to do a store instruction from working memory location 13 into the storage memory location for A (e.g. 3).

1100 0011 1101

where:

- 1100 is the store instruction

- 0011 (or decimal 3) is the memory location where to store the result.

- 1101 (or decimal 13) is the memory location where the result is stored in working memory.

Congratulations, you just wrote machine code.

Complete machine code

The complete machine code for A = B + C is:

0010 1011 0001

0010 1100 0010

1010 1101 1011 1100

1100 0011 1101
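To see these four lines do their job, here is a minimal sketch of a machine that understands only the three made-up instructions from this walkthrough. It assumes a single 16-cell memory that holds both the storage locations (1, 2, 3) and the working locations (11, 12, 13); the function name `run_machine_code` and the sample values for B and C are also assumptions.

```python
LOAD, ADD, STORE = "0010", "1010", "1100"  # arbitrary opcodes from the steps


def run_machine_code(program, memory):
    """Decode and execute each line of binary machine code in order."""
    for line in program:
        opcode, *operands = line.split()
        args = [int(bits, 2) for bits in operands]  # binary fields to decimal
        if opcode == LOAD:     # load: working location, storage location
            working, storage = args
            memory[working] = memory[storage]
        elif opcode == ADD:    # add: result location, first, second
            dest, left, right = args
            memory[dest] = memory[left] + memory[right]
        elif opcode == STORE:  # store: storage location, working location
            storage, working = args
            memory[storage] = memory[working]
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return memory


PROGRAM = [
    "0010 1011 0001",       # Step 1: load B
    "0010 1100 0010",       # Step 2: load C
    "1010 1101 1011 1100",  # Step 3: add
    "1100 0011 1101",       # Step 4: store the result in A
]

memory = [0] * 16
memory[1] = 5  # B (sample value)
memory[2] = 7  # C (sample value)
run_machine_code(PROGRAM, memory)
print(memory[3])  # A: prints 12
```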

Wrap it Up

Look back at the steps. Having to think in binary, remember each instruction’s binary code, and make sure you never make a mistake would be very tedious and time-consuming. It’s no wonder that the early programmers were mathematicians and engineers.

Your Code to Machine Code

The binary code you just stepped through is the code your human-readable code is eventually converted into after it goes through the parsing, translation, and final conversion processes.

Working hour after hour in numbers is not efficient. As we will see in the next section, our role expanded when translators were invented, allowing us to abstract away the machine code into a form we could use and understand.
